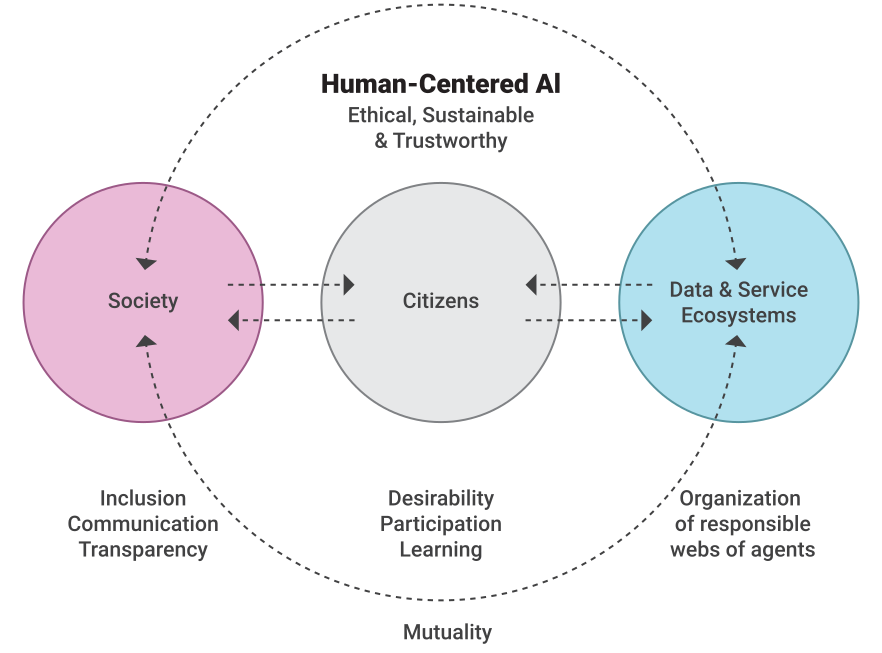

Human-Centered AI (HCAI) design ensures AI-driven solutions are aligned with human needs, behaviors, and ethics, integrating design thinking and data science to enhance user experiences. Unlike traditional Human-Centered Design (HCD), HCAI goes beyond usability to incorporate AI’s computational power while ensuring fairness, transparency, and interpretability.

The journey of AI began with rule-based expert systems and evolved into complex machine learning and deep learning models. Initially, AI systems were designed for efficiency and automation, often without considering human-centric factors such as transparency and fairness. However, the rise of ethical concerns, biased algorithms, and the lack of explainability has fueled the demand for a new approach—HCAI.

Unlike traditional AI, which emphasizes automation and data-driven optimization, HCAI prioritizes human needs and values. AI design has transitioned from merely improving computational efficiency to developing systems that work collaboratively with humans, ensuring decision-making processes remain accountable and inclusive.

| Aspect | Human-Centered Design (HCD) | Human-Centered AI (HCAI) |
| --- | --- | --- |
| Focus | User needs & usability | User needs + AI capabilities |
| Design Approach | Iterative problem-solving | AI-driven co-creation |
| Decision-making | Human-driven | AI-augmented & human-guided |
| Primary Concern | Usability & accessibility | Fairness, transparency, & interpretability |
| Data Utilization | Limited | Extensive AI-driven insights |

Table 9.1: Human-Centered Design vs. Human-Centered AI Design

Why Human-Centered AI Matters

For AI to be effective and ethical, it must align with diverse human needs. Key pillars of HCAI include:

- Accessibility: AI should cater to people across different linguistic, educational, and technological backgrounds.

- Transparency & Trust: AI-driven decisions must be understandable to users, especially in high-stakes domains like healthcare, finance, and governance.

- Fairness & Bias Mitigation: AI models should be trained on diverse datasets to prevent discriminatory outcomes.

- Ethical Deployment: AI should support, rather than replace, human professionals, particularly in fields like education, healthcare, and legal systems.

Additionally, AI should empower users through interactive and explainable interfaces that promote trust and comprehension. Designing AI with a human-centric mindset ensures inclusivity, ethical responsibility, and social alignment.

Human-Centered AI vs. Traditional AI

Unlike traditional AI, which focuses on maximizing efficiency and automating tasks, HCAI emphasizes human-AI collaboration and ethical decision-making. The following examples show how the two approaches differ by domain:

- Education: Traditional AI automates grading and content delivery, while HCAI creates personalized learning platforms that adapt to individual students' needs.

- Healthcare: Traditional AI optimizes diagnosis and data processing, while HCAI ensures AI respects patient comfort, privacy, and emotional well-being.

- Finance: Traditional AI optimizes risk analysis for investments, while HCAI ensures transparency and prevents biased credit scoring.

- Transportation: Traditional AI focuses on developing fully autonomous vehicles, while HCAI enhances driver-assistance systems to improve safety and comfort.

- Customer Service: Traditional AI chatbots handle queries efficiently, whereas HCAI-powered assistants detect user frustration and escalate issues to human agents when necessary.

Fig 9.1: An overview of Human-Centered AI Design

The Human-Centered AI Design Process

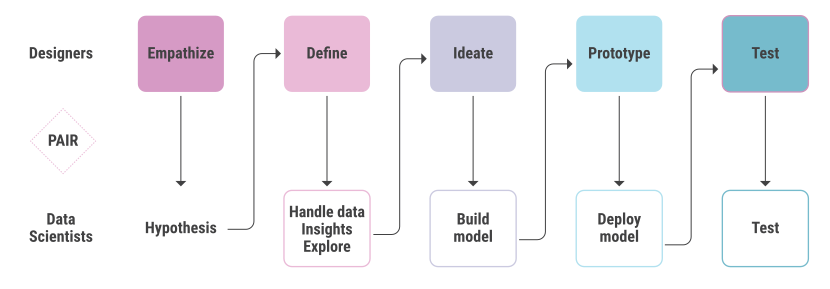

Stage 1: Empathize & Hypothesis

The Empathize & Hypothesis stage is where designers, engineers, and data scientists collaborate to:

- Understand user needs deeply

- Assess AI’s potential to enhance user experience

- Identify key opportunities where AI provides unique value

- Develop initial hypotheses about how AI should function

This phase relies on qualitative and quantitative insights to inform AI development, ensuring that technology does not dictate design but rather serves as an enabler of better human experiences.

Key Design Goals for Empathize & Hypothesis

To create user-centered AI solutions, teams should:

- Engage in User Research: Conduct qualitative interviews, surveys, and ethnographic studies to understand the motivations, pain points, and workflows of target users.

- Apply Design Thinking Techniques: Use methodologies such as Jobs-to-be-Done (JTBD) and User Journey Mapping to contextualize user challenges.

- Leverage AI Ideation Frameworks: Use tools like:

  - AI Design Sprints to rapidly prototype AI concepts.

  - AI Prompt Card Decks for brainstorming AI use cases.

  - AI Canvases to visualize potential AI applications and associated risks.

- Assess Uncertainty & Risk: Categorize AI-driven decisions into levels of uncertainty to mitigate risks early in the process:

  - Low uncertainty → Low risk

  - Medium uncertainty → Some risk

  - High uncertainty → High risk
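The uncertainty-to-risk mapping above can be sketched in a few lines of Python. This is a minimal illustration, assuming uncertainty is approximated as one minus a model's confidence score. The cutoffs (0.2 and 0.5), the names `risk_level` and `needs_human_review`, and the review policy are hypothetical choices made for this example, not values taken from any framework.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low risk"
    SOME = "some risk"
    HIGH = "high risk"


def risk_level(confidence: float, low_cutoff: float = 0.2, high_cutoff: float = 0.5) -> Risk:
    """Map a model confidence score in [0, 1] to a risk tier.

    Uncertainty is approximated as 1 - confidence. The cutoffs are
    illustrative assumptions and should be set per use case.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    uncertainty = 1.0 - confidence
    if uncertainty < low_cutoff:
        return Risk.LOW
    if uncertainty < high_cutoff:
        return Risk.SOME
    return Risk.HIGH


def needs_human_review(confidence: float) -> bool:
    """Hypothetical policy: anything above low risk is routed to a person."""
    return risk_level(confidence) is not Risk.LOW
```

A team might call `needs_human_review(score)` before acting on a prediction, so that medium- and high-uncertainty decisions stay human-guided rather than fully automated.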

Pairing Designers with Data Scientists

A significant shift in AI development is the close collaboration between designers and data scientists. This partnership ensures that:

- Designers bring human insights to data science models.

- Data scientists align their models with real-world user needs.

- AI solutions are designed with a balance between automation and augmentation.

A notable example of this approach is IBM’s AI Fairness 360 Toolkit, which helps designers and data scientists detect and mitigate biases in AI models collaboratively.

Optimizing AI: Precision vs. Recall

When developing AI models, teams must decide whether to prioritize precision or recall:

- High Precision → Reduces false positives but may miss relevant cases (more false negatives).

- High Recall → Captures most relevant cases but may include more false positives.

For example, in a medical diagnosis AI, it’s more critical to prioritize recall to avoid missing potential cancer patients (false negatives). In contrast, for fraud detection AI, prioritizing precision may be better to prevent blocking legitimate users.
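To make the trade-off concrete: precision is TP / (TP + FP) and recall is TP / (TP + FN), where TP, FP, and FN count true positives, false positives, and false negatives. The sketch below computes both at several decision thresholds. The labels and scores are made up for illustration, and the function name `precision_recall` is an assumption of this example.

```python
def precision_recall(y_true, y_score, threshold):
    """Return (precision, recall) for binary labels at a given score threshold."""
    tp = fp = fn = 0
    for label, score in zip(y_true, y_score):
        predicted = score >= threshold
        if predicted and label == 1:
            tp += 1
        elif predicted and label == 0:
            fp += 1
        elif not predicted and label == 1:
            fn += 1
    # Guard against division by zero when nothing is predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up labels (1 = positive case) and model scores, for illustration only.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_score = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]

for threshold in (0.25, 0.5, 0.75):
    p, r = precision_recall(y_true, y_score, threshold)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

On this toy data, lowering the threshold raises recall and lowers precision, which suits a screening scenario where a missed case is costly. Raising the threshold does the opposite, which suits a scenario where wrongly flagging a legitimate user is the greater harm.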

Guidance such as Google's People + AI Guidebook can help teams design reward functions that balance these trade-offs.

Stage 2: Define

In the Define phase, teams refine the problem statement based on insights from Stage 1. This phase involves:

- Synthesizing research data to define user pain points.

- Creating personas to represent user types.

- Developing a problem statement that captures the core challenge AI aims to solve.

- Identifying AI opportunities within constraints like ethical considerations, feasibility, and regulatory compliance.

A clear problem definition ensures that AI solutions remain focused and impactful, preventing scope creep.

Stage 3: Ideate

The Ideate phase encourages brainstorming multiple solutions. Methods used include:

- Storyboarding AI Interactions: Mapping user journeys to visualize AI integration points.

- Sketching AI Workflow Models: Conceptualizing AI behaviors and outputs.

- Using AI-Specific Brainstorming Tools: Google’s People + AI Guidebook provides frameworks for AI-specific ideation.

Teams should generate diverse ideas before narrowing down the best solutions based on feasibility, user impact, and ethical considerations.

Stage 4: Prototype

The Prototype phase involves creating tangible AI-powered experiences. Approaches include:

- Wizard of Oz Testing: Simulating AI behavior manually before full-scale implementation.

- Low-Fidelity Mockups: Using tools like Figma or Sketch for early UI/UX prototyping.

- Building AI Proof-of-Concepts (PoCs): Developing small-scale AI models for usability testing.

Rapid prototyping allows teams to validate AI assumptions early, reducing development risks.
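Wizard of Oz testing can be set up so that the prototype interface does not care whether a person or a model produces the reply. The sketch below is one possible arrangement, not a prescribed method: the names `run_session` and `scripted_wizard` and the canned replies are hypothetical. The "wizard" here is scripted only so the example runs unattended.

```python
from typing import Callable, List, Tuple

# Any function that turns a user message into a reply can act as the "AI".
Responder = Callable[[str], str]


def run_session(user_messages: List[str], responder: Responder) -> List[Tuple[str, str]]:
    """Feed each user message to the responder and record the exchange."""
    transcript = []
    for message in user_messages:
        transcript.append((message, responder(message)))
    return transcript


def scripted_wizard(message: str) -> str:
    """Stand-in for a human operator; canned replies keep the example runnable.

    In a real study this would relay the message to a researcher,
    for example via input(), and return whatever they type.
    """
    canned = {
        "Where is my order?": "Your order is out for delivery.",
    }
    return canned.get(message, "Let me connect you with a colleague.")


transcript = run_session(["Where is my order?", "I want a refund"], scripted_wizard)
for user_text, reply in transcript:
    print(f"user: {user_text}\nassistant: {reply}")
```

Because `run_session` depends only on the `Responder` signature, the human operator can later be swapped for a proof-of-concept model without changing the test harness, and the recorded transcripts remain comparable.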

Stage 5: Test

The Test phase ensures AI aligns with user needs through:

- User Testing with AI Prototypes: Gathering real-world feedback.

- Bias & Fairness Audits: Using tools like Fairlearn, an open-source toolkit that originated at Microsoft, to detect biases.

- Iterative Refinements: Improving AI interactions based on testing insights.
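One simple check that a bias and fairness audit might include is comparing how often each group receives a positive prediction. The sketch below computes that gap by hand so it has no dependencies. The data, the group labels, and the function names are made up for illustration. Fairlearn's `fairlearn.metrics` module offers a comparable `demographic_parity_difference` metric; consult its documentation for the exact signature before relying on it.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Fraction of positive predictions (1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(y_pred, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Made-up loan approvals (1 = approved) for two hypothetical groups.
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(y_pred, groups))
print(demographic_parity_gap(y_pred, groups))
```

A gap near zero means the groups are selected at similar rates. A large gap is a prompt for further investigation rather than proof of discrimination, since this single metric ignores differences in the underlying labels.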

Fig 9.2: Human-Centered AI Design Process

Trends & Challenges in HCAI

- Ethical AI Governance: Organizations are developing AI ethics committees to oversee design and implementation.

- Adaptive AI Systems: AI that evolves based on user interactions and real-time feedback.

- Regulatory Challenges: Navigating GDPR, the EU AI Act, and other compliance frameworks.

As AI continues to shape our world, integrating human-centered principles into AI development is not just a best practice—it is essential for creating technology that empowers people and enriches lives.

Conclusion

The Empathize & Hypothesis phase in Human-Centered AI Design sets the foundation for AI solutions that are not only technically robust but also deeply aligned with human needs. By fostering collaboration between designers and data scientists, organizations can:

- Develop AI that is more intuitive, ethical, and impactful.

- Reduce risks associated with bias and poor user experiences.

- Create AI-driven services that truly enhance, rather than replace, human capabilities.

As AI continues to shape our world, integrating human-centered principles into AI development is no longer optional—it is essential for creating technology that empowers people and enriches lives.

Contributors / Authors

Mohan Das Viswam, Dy. Director General & HoG, mohandas@nic.in

Mohan Das Viswam

Deputy Director General & HoG

National Informatics Centre HQ

Room #379, A4B4, A block, CGO Complex

Lodhi Road, New Delhi - 110003