Generative AI is experiencing unprecedented growth and adoption across diverse industries, fundamentally transforming organizational operations and the landscape of creativity and innovation. This technology, rooted in advanced machine learning algorithms, leverages vast datasets to autonomously generate original text, images, audio, video, and even more complex outputs. It goes beyond mere automation—generative AI enhances human capabilities, reshaping workflows and pushing boundaries in productivity, customer service, product design, content creation, research, and even data synthesis.

The skills and knowledge required to work with generative AI have become crucial in the modern workforce as businesses increasingly invest in tools and platforms to boost creativity, streamline efficiency, and drive competitive advantage. With applications across sectors like healthcare, finance, media, and education, professionals with expertise in generative AI are now pivotal in optimizing operations and developing transformative solutions.

A cornerstone of this evolution is the Transformer architecture, a neural network model that has shifted the paradigm of natural language processing and AI model efficiency. Transformers excel at interpreting language context, understanding not just the meaning of individual words but also their relationships within entire phrases and sentences. Unlike older models that process information sequentially, Transformers analyze all parts of an input simultaneously, making them faster, highly scalable, and well-suited for parallel processing on GPUs. This simultaneous approach has facilitated the emergence of foundation models like GPT, BERT, and others, which serve as adaptable, general-purpose models that can be fine-tuned for specific tasks.

Generative AI techniques are diverse, with each model family serving distinct functions. GANs (Generative Adversarial Networks), for example, have proven adept at creating hyper-realistic images by pitting two networks against each other in a “creative rivalry,” whereas VAEs (Variational Autoencoders) are well-suited for data compression and synthesis, generating smooth data representations that are valuable for tasks like anomaly detection and recommendation systems. These models are not one-size-fits-all solutions. Instead, their impact lies in carefully integrating them into tailored applications that fit specific industry needs. For instance, while Transformers have revolutionized text and language-based applications, GANs have unlocked new levels of visual realism for the media and entertainment industries, and VAEs are proving valuable in healthcare for generating synthetic patient data to advance medical research while safeguarding patient privacy.

Generative AI holds the potential for profound industry shifts, not only enabling businesses to innovate at scale but also empowering them to discover new possibilities and operate more inclusively and effectively. As these models continue to evolve, so too will the breadth of generative AI’s applications, ushering in a future where creativity, innovation, and efficiency intersect and reshape the digital world in remarkable and unforeseen ways.

What’s AI/ML and Deep Learning?

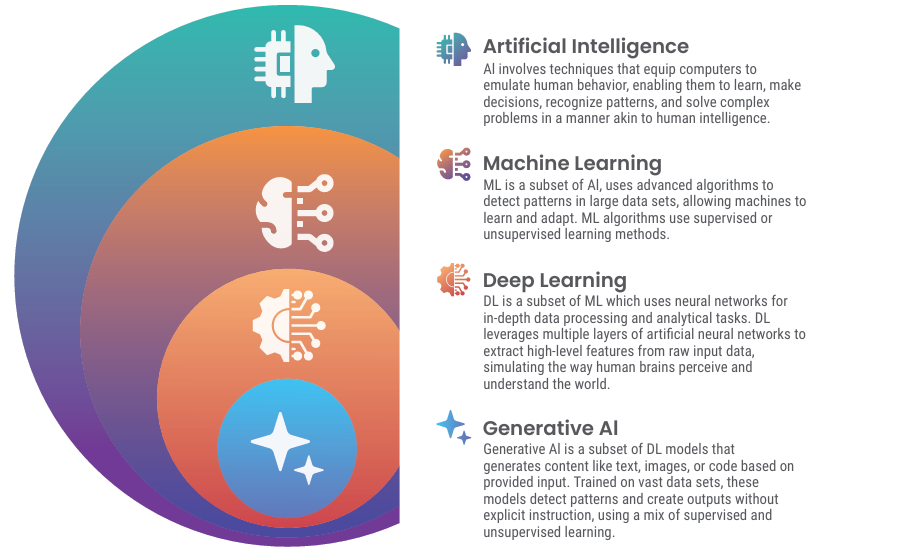

Artificial Intelligence (AI) refers to a broad set of technologies that empower machines to perform tasks traditionally requiring human intelligence. Two significant subfields within AI—Machine Learning (ML) and Generative AI—have particularly gained attention for their transformative capabilities.

AI and Machine Learning

Artificial Intelligence (AI): AI involves simulating human-like intelligence in machines, enabling them to think, learn, reason, solve problems, understand natural language, and interpret sensory information. This broad concept underpins many advancements that are reshaping industries today.

Machine Learning (ML): ML is a specialized subset of AI that focuses on creating algorithms capable of making data-driven predictions. Rather than being explicitly programmed for each task, ML models use statistical methods to identify patterns in data and improve performance over time. Common use cases include recommendation engines, fraud detection systems, and predictive analytics.

Deep Learning

Deep learning, a sophisticated subfield of ML, uses artificial neural networks with multiple layers to analyze and interpret complex data. These deep architectures excel at tasks like image and speech recognition, as they can autonomously extract features from raw data without the need for manual feature engineering. This automation has led to significant advancements in fields requiring pattern recognition and data analysis.

Generative AI

Generative AI, a subset of AI that often utilizes deep learning, is dedicated to creating new content that mirrors the data it has been trained on. This includes the generation of images, videos, text, and audio that are novel yet bear a resemblance to the original input data. Models such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are specifically designed to learn and replicate the patterns within input data distributions, producing creative outputs that are both innovative and contextually relevant.
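The idea of learning the patterns in training data and then sampling new content that resembles it can be illustrated with a deliberately tiny stand-in. The sketch below is not a GAN or a VAE; it is a word-level bigram model, assumed here only because it fits in a few lines. The toy corpus and the function names are illustrative assumptions.

```python
import random
from collections import defaultdict

def train_bigram_model(text):
    """Record, for each word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Sample a new word sequence by repeatedly picking an observed follower."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:  # dead end: this word never had a successor in training
            break
        output.append(rng.choice(options))
    return output

# Hypothetical toy corpus, used only for illustration.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
sample = generate(model, start="the", length=8)
print(" ".join(sample))
```

Every adjacent word pair in the output was seen during training, yet the full sentence may be new. Deep generative models rely on the same learn-then-sample principle, with far richer representations of the data distribution.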

Evolution of Generative AI

Early 1950s: Foundations of Machine Learning and Neural Networks

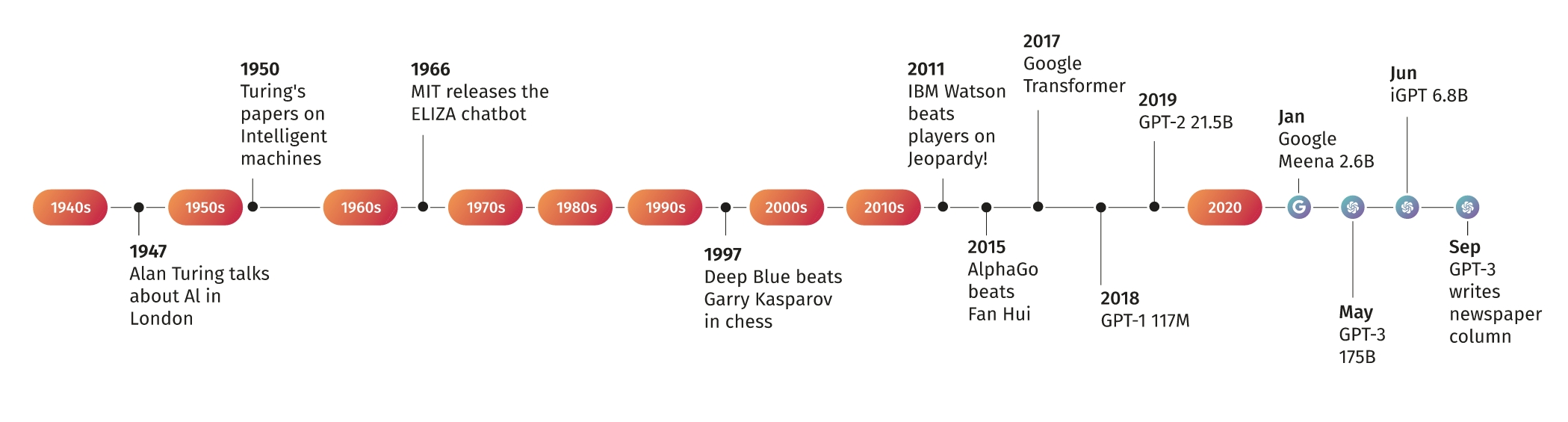

Generative AI has its roots in the early days of machine learning and deep learning research. In 1952, Arthur Samuel wrote one of the first self-learning programs, designed to play checkers, which laid the groundwork for self-improving programs; he went on to coin the term “machine learning” in 1959. Shortly after the checkers program, in 1957, Frank Rosenblatt, a psychologist at Cornell University, developed the Perceptron. This was the first “neural network” capable of learning, consisting of a single layer of artificial neurons with adjustable weights and thresholds. Although the Perceptron demonstrated some learning capabilities, it struggled with more complex tasks due to its limited architecture, ultimately stalling progress in the field.
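A minimal sketch of a single perceptron with adjustable weights and a threshold (encoded as a bias term) may make both its learning ability and its limits concrete. The training rule shown is the textbook perceptron update; the AND and XOR datasets and all names are illustrative assumptions, not a reconstruction of Rosenblatt’s original system.

```python
def predict(weights, bias, inputs):
    """Fire (1) when the weighted sum exceeds the threshold, encoded as -bias."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, epochs=20, learning_rate=1):
    """Textbook perceptron rule: shift weights by the error on each sample."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# AND is linearly separable; XOR is not.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(AND_DATA)
and_predictions = [predict(and_weights, and_bias, x) for x, _ in AND_DATA]

xor_weights, xor_bias = train_perceptron(XOR_DATA)
xor_predictions = [predict(xor_weights, xor_bias, x) for x, _ in XOR_DATA]

print("AND targets:", [t for _, t in AND_DATA], "predicted:", and_predictions)
print("XOR targets:", [t for _, t in XOR_DATA], "predicted:", xor_predictions)
```

The single unit learns AND but cannot reproduce XOR no matter how long it trains, because no single straight line separates the XOR classes. This is the kind of limitation that multilayer networks later overcame.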

1960s–1970s: The Rise of Early AI Programs and Pattern Recognition

During the 1960s, AI research expanded to include early generative applications. Between 1964 and 1966, Joseph Weizenbaum developed ELIZA, a program that used basic natural language processing to emulate a Rogerian psychotherapist, marking a breakthrough in conversational AI. ELIZA used pattern matching and substitution to simulate empathetic responses, a foundational concept for later chatbot development. In parallel, computer vision and pattern recognition research gained traction, with Ann B. Lesk, Leon D. Harmon, and A. J. Goldstein advancing facial recognition technology in 1972. Their study, “Man-Machine Interaction in Human-Face Identification,” identified 21 unique markers (such as lip thickness and hair color), establishing a framework for automatic facial recognition systems. In 1979, Kunihiko Fukushima proposed the Neocognitron, an early deep-learning neural network with a multilayer, hierarchical structure designed for visual pattern recognition, particularly for handwritten characters. This architecture inspired later convolutional neural networks (CNNs), laying the groundwork for advanced image recognition.

1990s: The Impact of Gaming and Graphics Processing on AI Development

The gaming industry inadvertently accelerated generative AI advancements in the 1990s, particularly through the development of high-powered graphics cards. Nvidia released the GeForce 256 in 1999 and marketed it as the first GPU; researchers later found that GPUs could efficiently process neural networks, speeding up AI training. The parallel processing power of GPUs soon became indispensable in AI research, particularly for tasks that required large-scale data processing and complex pattern recognition.

2010s: Smarter Chatbots and the Rise of Generative Networks

The early 2010s saw the emergence of more sophisticated virtual assistants, starting with Apple’s release of Siri in 2011 as one of the first widely adopted digital assistants capable of executing voice commands. Then, in 2014, a landmark advancement came with the introduction of Generative Adversarial Networks (GANs) by Ian Goodfellow and colleagues. GANs consist of two neural networks, the generator and the discriminator, that are trained in competition with each other to produce highly realistic images, videos, and audio by learning from real-world data. GANs have since been used extensively in creative fields, from deepfake technology to digital art.

2017–Present: The Transformer Architecture and Modern Generative AI

The Transformer architecture, introduced in 2017, marked a significant leap in natural language processing and generative AI. Unlike recurrent neural networks (RNNs) and long short-term memory (LSTM) models, Transformers analyze all parts of an input simultaneously rather than sequentially. This parallel processing approach, combined with self-attention mechanisms, enables Transformers to capture intricate language nuances. Google’s BERT (Bidirectional Encoder Representations from Transformers), developed in 2018, exemplifies this advance, allowing deeper contextual understanding in natural language tasks. BERT is widely used for applications such as search ranking, chatbots, virtual assistants, sentiment analysis, and topic classification.

In 2022, OpenAI introduced ChatGPT, a generative language model built on Transformers, marking a turning point in the field. Powered by large language models, ChatGPT demonstrated unprecedented capabilities in conversational AI, handling tasks such as writing, research, and coding, and, in later versions, working with images. ChatGPT’s abilities have expanded AI’s reach across industries, from customer service and content creation to research and education. Despite its transformative impact, generative AI models like ChatGPT face scrutiny over “hallucinations” (the generation of plausible but incorrect information), a limitation researchers are actively working to address.
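The self-attention mechanism mentioned above can be sketched in plain Python. This is a minimal illustration of scaled dot-product attention under simplifying assumptions: the token vectors are toy numbers, and the same vectors serve as queries, keys, and values, whereas a real Transformer derives each from learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: every position looks at every position."""
    scale = math.sqrt(len(keys[0]))
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights.append(softmax(scores))
    width = len(values[0])
    outputs = [
        [sum(w * v[d] for w, v in zip(row, values)) for d in range(width)]
        for row in weights
    ]
    return outputs, weights

# Three toy token vectors (assumed values, for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, including itself. Because no row depends on another, all rows can be computed at the same time, which is what makes the architecture well suited to parallel hardware such as GPUs.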

The Future: Generative AI as a Core Pillar of Innovation

Generative AI continues to evolve, promising to reshape industries by enabling more intuitive user experiences, automating complex tasks, and unlocking creative potential. The trajectory from early machine learning algorithms to today’s advanced generative systems illustrates an accelerating pace of innovation, with each decade building on previous breakthroughs. As generative AI tools become more accessible and refined, they are poised to empower a new era of creativity, productivity, and exploration across every sector.

Recent Advancements

Language Processing

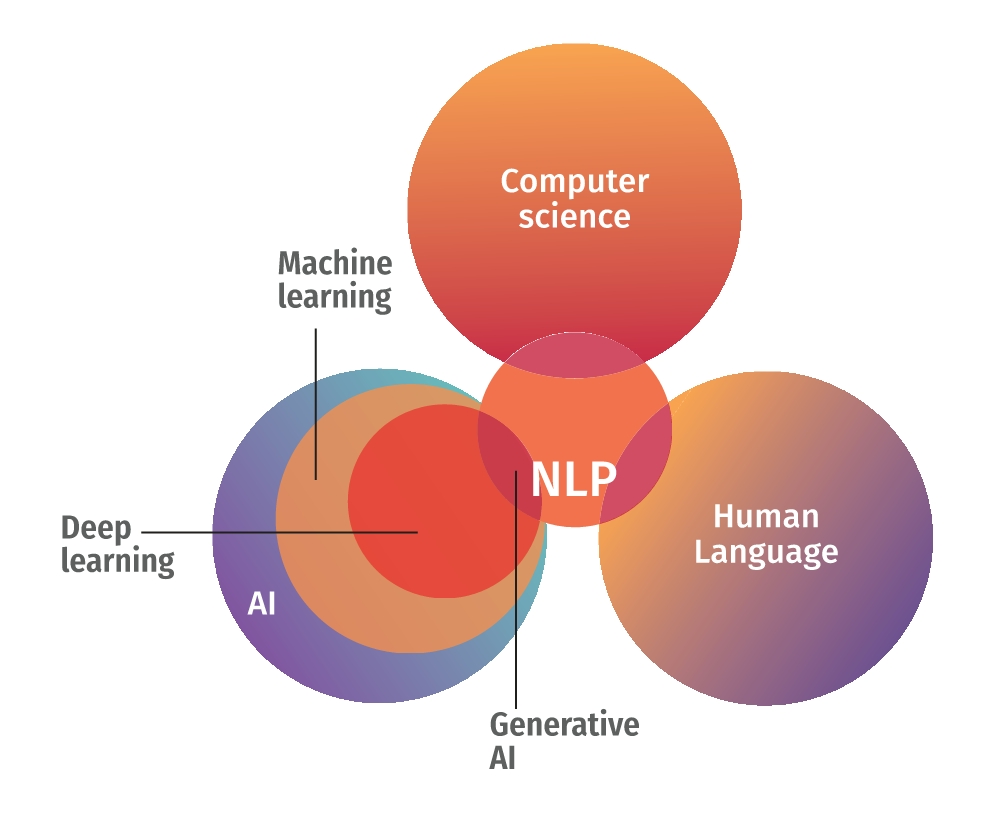

Natural Language Processing (NLP) is a core branch of artificial intelligence that focuses on the interaction between computers and human language. Generative AI has significantly advanced NLP applications, enabling systems to understand context, compose coherent language, and hold meaningful conversations; models such as GPT excel at producing coherent and contextually relevant content. NLP, in turn, is what lets generative AI recognize the nuances of spoken or written language, comprehend user inputs, and generate appropriate and meaningful responses. From chatbots to virtual assistants, NLP facilitates seamless communication between humans and machines, making it a vital component of the digital world. As the technology evolves, NLP’s capabilities will continue to expand, paving the way for more sophisticated applications in fields like healthcare, finance, and education.

Various Models Available in the Market

Several generative AI models have emerged, each with unique capabilities.

Known for their ability to generate large amounts of written content, LLMs (Large Language Models) are highly versatile and can handle tasks ranging from text generation to language translation on a broad scale. Examples include Google’s Gemini, GPT-4, Claude Opus, and Llama 3.

OpenAI’s GPT (Generative Pre-trained Transformer): This model family excels in natural language processing tasks like text creation, summarization, and translation.

- GPT-1: Launched in 2018, this was the first model in the GPT series, laying the groundwork for future advancements.

- GPT-2: Released in 2019, it featured increased scale and enhanced capabilities.

- GPT-3: Introduced in 2020, it demonstrated the ability to produce human-like text.

- GPT-3.5: Released in 2022, it offered improved context generation and text editing features.

- GPT-4: Launched in 2023, it can process both text and images, offering better reasoning and contextual understanding.

Multimodal Models

These models accept various input types, such as text and images, to generate versatile outputs like text, images, and audio. Examples include DALL-E, Midjourney, and Stable Diffusion.

- OpenAI’s DALL-E: Generates images from textual descriptions, showcasing the ability to create visual content from language input.

- Stable Diffusion: A widely used model for generating high-quality images based on text prompts.

- Gemini: Developed by Google, this multimodal architecture can process text, images, audio, and video.

- Project Astra: An ongoing Google project focused on creating AI agents that enhance NLP, complex reasoning, and productivity tools.

Neural Radiance Fields (NeRFs)

NeRFs are a powerful generative AI technique for creating 3D imagery from 2D images. They are increasingly used in virtual reality, computer graphics, and related fields.

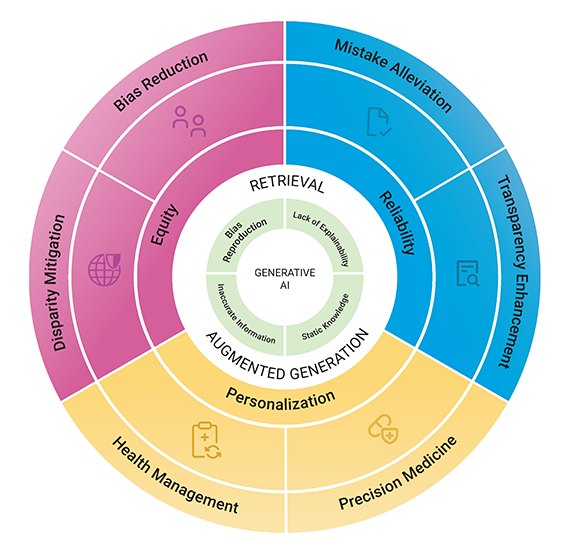

RAG (Retrieval-Augmented Generation)

RAG combines retrieval-based techniques with generative models to improve the generation process. It retrieves data from external databases or publications to provide more accurate and relevant responses. This approach leverages real data to enhance the reliability of generated content.

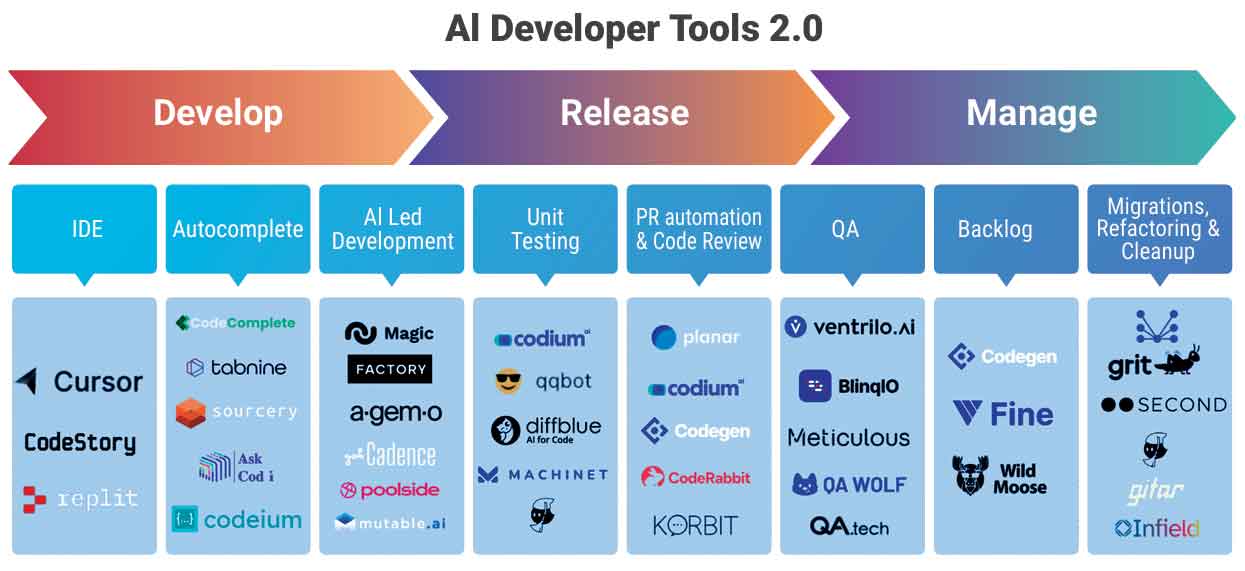
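The retrieve-then-generate flow described above can be sketched with a toy example. Everything here is an assumption for illustration: the three-sentence knowledge base, the bag-of-words cosine similarity used as the retriever (production systems typically use learned embeddings and a vector store), and the prompt wording. The generation step is left out; the assembled prompt is what would be passed to a generative model.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base standing in for an external document store.
DOCUMENTS = [
    "BHASHINI provides translation services for Indian languages.",
    "Neural radiance fields create 3D imagery from 2D images.",
    "GitHub Copilot suggests code snippets based on comments.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, passages):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

question = "Which tool creates 3D imagery from images?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
print(prompt)  # this prompt would then be sent to a generative model
```

Grounding the prompt in retrieved text is what lets the generator answer from real data rather than from its training patterns alone.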

Coding and Generative AI

Generative AI is rapidly being integrated into software development processes. Tools like GitHub Copilot use generative models, trained on patterns found in existing code, to suggest code snippets based on comments or surrounding code. These context-aware suggestions automate routine coding tasks, improve efficiency, and can help reduce errors. Here are some key considerations when using generative AI for coding and software development:

- Quality Control: Generated code may not always meet quality or security standards, requiring human review and validation before deployment.

- Dependence on Data: The effectiveness of generative AI relies heavily on the quality and diversity of the training data. Poor data quality can lead to subpar outcomes.

- Ethical Considerations: Like any AI system, generative AI must address ethical concerns, such as intellectual property rights and biases in generated outputs.

Indian Scenario

AI and generative technologies are rapidly gaining traction in India, with applications spanning sectors like healthcare, banking, education, and entertainment. Startups are using generative AI for content creation, automating customer service, and implementing customized marketing strategies. The government is also encouraging programs that promote AI research and development. Many people in India have used ChatGPT, a chatbot developed by OpenAI, which is built on large language models (LLMs). These models interpret and generate content using massive datasets, trained primarily on English-language data from around the world. However, several Indian companies are taking a different approach. They are developing regional and Indic language models, both large and small, to cater to the linguistic diversity of the country. Smaller language models require fewer but more specialized datasets, which are easier to source locally. Key players in this space include Bhavish Aggarwal’s Krutrim, Tech Mahindra’s Indus project, AI4Bharat’s Airavata series, Sarvam AI’s OpenHathi series, CoRover.ai’s BharatGPT, and SML India’s Hanooman LLM series. Other notable generative AI initiatives in India include The Nilekani Center at AI4Bharat, Sarvam AI, Project Vaani (a collaboration between AI and Robotics Technology Park at IISc and Google), Ozonetel (cloud-based communications), and Swecha Telangana at the International Institute of Information Technology, Hyderabad, among others.

NIC & MeitY Initiatives

The Ministry of Electronics and Information Technology (MeitY) has initiated the implementation of a ‘National Programme on AI,’ which includes four main components: Data Management Office, National Centre for AI, Skilling on AI, and Responsible AI. The ‘IndiaAI’ framework complements this program by providing a focused and comprehensive approach to address specific gaps in the AI ecosystem. As one of the largest Global South economies leading the AI race, India has been appointed as the Council Chair of the Global Partnership on AI (GPAI), securing more than two-thirds of first-preference votes. IndiaAI’s mission-centric strategy emphasizes bridging gaps in AI infrastructure, including compute resources, data availability, financing, research, innovation, targeted skills development, and institutional data management. This comprehensive approach is crucial for maximizing AI’s impact on India’s progress. To further strengthen its AI capabilities, India is establishing multiple Centres of Excellence, developing a national dataset platform, setting up a Data Management Office for governance, and exploring design approaches for indigenous AI chipsets. Moving forward, MeitY plans to establish a compute center with 10,000 GPUs, providing 40 Exaflops of computational power and approximately 200 PB of storage. The focus will be on prioritizing AI use cases in Governance, Agriculture, Health, Education, and Finance.

Centre of Excellence in Artificial Intelligence (NIC Initiatives)

NIC took a significant leap in advancing AI in governance by establishing the Centre of Excellence in Artificial Intelligence in 2019. This pioneering initiative aims to explore, develop, and implement AI applications specifically tailored to enhance public services and governance structures in India. At its core, the center focuses on model building in critical areas such as Image and Video Analytics, Speech Synthesis and Recognition, and Natural Language Processing, each geared toward creating solutions that streamline citizen services and improve administrative efficiencies. To make AI accessible and scalable for various government agencies, NIC launched AI as a Service (AIaaS) on its Meghraj Cloud in January 2021. This service allows agencies to tap into AI capabilities without needing to develop or manage complex infrastructure. Here is a comprehensive overview of NIC’s key AI initiatives that are driving transformation in Indian governance:

- AI - Manthan: A versatile platform for creating, training, and testing AI-based deep learning models. It simplifies the model development process, making it easier for developers to design effective solutions.

- AI - Tainaatee: An inference testbed specifically designed for deploying models created in AI - Manthan. Once models are trained, Tainaatee allows them to be tested for real-world deployment, ensuring that they are production-ready. This testbed optimizes model performance for practical applications in government projects.

- AI - Tippanee: Annotation tools and services essential for building reliable AI models. AI - Tippanee provides a suite of tools for tagging, labeling, and preparing data, which is a crucial step in the machine learning process, especially in supervised learning scenarios.

- AI - Satyapikaanan (Face Recognition as a Service - FRAAS): Satyapikaanan offers API-based face recognition and liveness detection services. This technology has enabled numerous e-governance applications, such as:

  - Life Certificate Verification for pensioners in Meghalaya.

  - Faceless Services in Regional Transport Offices (RTOs) for convenient public service access.

  - Attendance Tracking for skill development trainees under the Ministry of Minority Affairs.

- AI - ParichayID: An API service dedicated to verifying and matching form details accurately. This tool helps in validation processes where user-submitted information must be cross-checked for accuracy and completeness, supporting initiatives that require secure and verified data submissions.

- AI - VANI (Virtual Assistance by NIC): VANI encompasses chatbots, voicebots, and transliteration tools, provided via the Meghraj Cloud. As a highly adaptable AI service, VANI has been instrumental in supporting multiple government projects:

  - NIC has deployed 20 chatbots, including for services like eWayBill and iKhedut (a portal for farmers), some of which offer multilingual support.

  - Additionally, eight bilingual voice support services, including for PM-Kisan and PM-Kusum, serve diverse citizen groups, enabling more inclusive access to government services across multiple states and ministries.

- AI - Vividh: A customizable AI application development service that also supports compute-only requests. Vividh is responsible for various specialized projects, such as:

  - SwachhAI, an automated toilet seat detection tool, supporting sanitation efforts under Swachh Bharat Urban.

  - Cognitive Search Tools for motor accident claim cases within eCourts, making case information retrieval faster and more efficient for legal and administrative personnel.

- AI - Prabandhan: A comprehensive framework for managing the lifecycle of AI models, including retraining, backup, infrastructure scaling, and disaster recovery. Prabandhan ensures that AI systems are not only functional but also sustainable, allowing continuous updates to keep models relevant and effective as data and requirements evolve.

BHASHINI Initiative

BHASHINI aims to break language barriers, making digital services accessible in local languages using voice-based technology. Launched in July 2022 by Honorable PM Shri Narendra Modi under the National Language Technology Mission, BHASHINI provides translation services for twenty-two scheduled Indian languages. Key features include automatic speech recognition, neural machine translation, text-to-speech conversion, language detection, and voice activity detection. This initiative holds the promise of bridging both linguistic and digital divides, empowering every citizen to engage with technology seamlessly.

Challenges of Generative AI

Despite its immense potential, generative AI faces several significant challenges:

- Hallucination: Generative models often rely on patterns from training data without real-world validation, producing outputs that may seem plausible but are incorrect or nonsensical. These AI hallucinations, particularly prevalent in large language models (LLMs), undermine the reliability and trustworthiness of AI systems. While generative AI can present content that appears factual, it often lacks true understanding, and inaccuracies in training data exacerbate this issue. Furthermore, generative AI can easily create misleading content, such as fake news and deepfakes. Since models are designed to generate convincing outputs even without a factual basis, all AI-generated content should be independently verified for accuracy.

- Bias: Bias in AI arises from systematic favoritism embedded in AI systems, leading to skewed outcomes or uneven treatment. This issue can manifest in critical areas like hiring, lending, criminal justice, and healthcare. As AI becomes more integrated into decision-making processes, it is crucial to identify and address these biases to ensure fairness and equity.

- Limitations: Despite rapid advancements, AI technology still has fundamental limitations that restrict its effectiveness across various fields. Understanding these constraints is essential for developers, businesses, and policymakers to make informed decisions about AI adoption and deployment.

- Privacy and Regulation: The widespread use of AI raises serious privacy concerns, necessitating comprehensive legal frameworks to protect personal data. As AI systems become more common, individuals, organizations, and regulators must be aware of the implications for data privacy and establish robust safeguards.

- Carbon Footprint: Training generative AI models requires substantial electricity, contributing to significant carbon emissions. For instance, training GPT-4 is estimated to consume between 51,772 and 62,318 megawatt hours of electricity, resulting in CO2 emissions ranging from 1,035 to 14,994 metric tonnes. These environmental impacts vary based on the geographical location of data centers. To put this into perspective, a 3,000-mile round-trip flight from London to Boston produces roughly one metric tonne of carbon dioxide.

- Copyright & Ownership: Generative AI often produces content that mimics or summarizes existing material, sometimes without the original creators’ consent. This raises issues of copyright, intellectual property, and ownership, especially given the lack of clear legal guidelines in this evolving field. Users should exercise caution and avoid feeding copyrighted or sensitive personal information into AI tools, as such data could be absorbed into training datasets and misused in unintended or unethical ways.
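The carbon-footprint figures quoted in the list above show why data-center location matters so much. The short calculation below derives the carbon intensity implied by each end of the quoted ranges. It assumes, for illustration only, that the lowest emissions estimate pairs with the lowest energy estimate and the highest with the highest; the source does not state this pairing.

```python
# Figures quoted in the Carbon Footprint item above.
ENERGY_MWH = (51_772, 62_318)        # low and high electricity estimates
EMISSIONS_TONNES = (1_035, 14_994)   # low and high CO2 estimates

def implied_intensity_kg_per_mwh(emissions_tonnes, energy_mwh):
    """Carbon intensity implied by an emissions/energy pair, in kg CO2 per MWh."""
    return emissions_tonnes * 1_000 / energy_mwh

# Assumed pairing: low with low, high with high (not stated in the source).
low = implied_intensity_kg_per_mwh(EMISSIONS_TONNES[0], ENERGY_MWH[0])
high = implied_intensity_kg_per_mwh(EMISSIONS_TONNES[1], ENERGY_MWH[1])

print(f"low estimate:  {low:.1f} kg CO2 per MWh")
print(f"high estimate: {high:.1f} kg CO2 per MWh")
print(f"intensity ratio: {high / low:.1f}x")
```

Under that assumption, the energy estimates differ by only about 20 percent while the implied carbon intensity differs by roughly a factor of twelve, so most of the spread in emissions comes from how the electricity is generated rather than how much is used.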

Way forward

To use generative AI responsibly, it is essential to:

- Prioritize Ethical Considerations: Ensuring transparency in model training methods and proactively addressing biases is crucial for ethical AI development.

- Pursue Cross-Disciplinary Collaboration: Advancing toward real Artificial General Intelligence (AGI), where machines demonstrate human-like cognitive abilities across diverse tasks, will require continuous research and collaboration across various fields.

In summary, while generative AI holds the promise to transform numerous industries, its long-term success will depend on addressing challenges through strong ethical standards.

Contributors / Authors

V. Uday Kumar, Dy. Director General & HoG, uday.kumar@nic.in

V. Uday Kumar

Deputy Director General & HoG

National Informatics Centre, A Block

CGO Complex, Lodhi Road, New Delhi - 110003